It’s time for a dialogue among researchers, funders, publishers and others to achieve shared understanding of “impact”.

In October last year I attended a seminar entitled “Publish and Perish?”, organised by the Young Academy of Sweden. Among other topics, six experts discussed how scientific research is, and should be, evaluated.

Several of the experts felt strongly that the journal impact factor is ill-suited to evaluating the research performance of individual scientists. They felt that relying on this factor and other bibliometrics runs the risk of stifling innovation and encouraging excessive caution when choosing research topics.

A recent peer-reviewed paper in Studies in Higher Education provides another twist on impact. The study, which focused on UK and Australian academics, found that researchers tend to exaggerate the non-academic impact of their work when they apply for grants. Summarising the study, an article on the Times Higher Education website quoted an Australian academic as wondering, “I don’t know what you’re supposed to say, something like ‘I’m Columbus, I’m going to discover the West Indies?!’”

What, then, is impactful research? How do we measure impact? Who decides on these issues? Many researchers are at a loss. Impact isn’t a concern for academia alone, of course. Government departments and companies, for example, are also concerned about the impact of their policies or products. Could we gain some clarity from how such entities understand and measure impact? A February 2016 working paper that I stumbled on last week is encouraging in that respect.

This working paper, conveniently entitled “What is impact?”, was published by the Overseas Development Institute – an independent, UK-based think tank on international development and humanitarian issues. The paper’s premise is that the way impact is framed significantly affects development processes and the design, management and evaluation of programmes. This has strong parallels with academia, where the framing of impact influences how research is funded, conducted and evaluated.

Many of the paper’s observations regarding the development community seem to hold just as well for academia. For example, there is no shared definition of what impact is: multiple understandings and interpretations are the norm, ranging from the very specific to the very broad. Moreover, there is debate on the methodologies for measuring impact. Instead of focusing on such debates, however, the paper explores who is defining impact and how development is being judged.

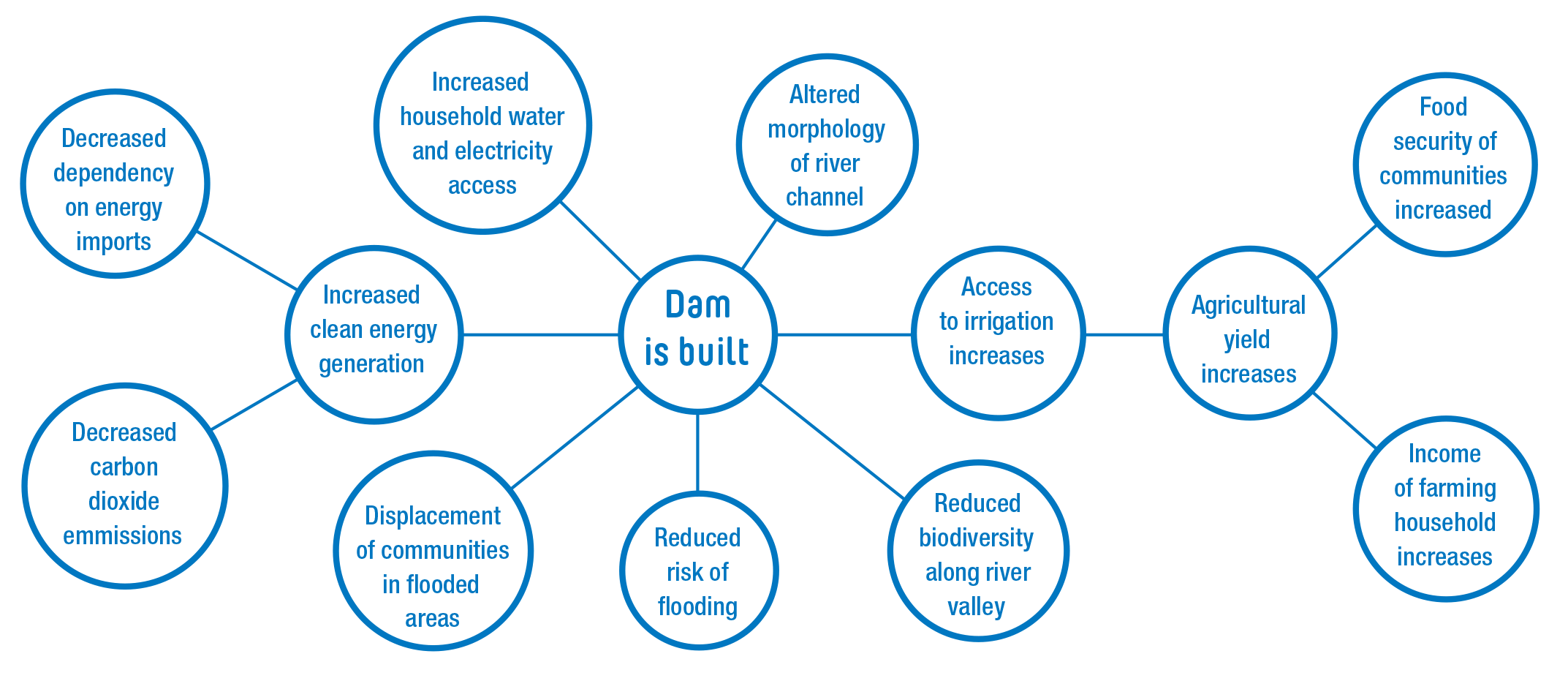

The paper lists six characteristics of impact to help clarify what it may mean in the context of development: application; scope; subject and level of change; degrees of separation; immediacy, rate and durability of change; and homogeneity of benefits. Degrees of separation, for example, refers to how direct the link is between an intervention and its outcome.

The paper calls on development programmes to engage various stakeholders in a dialogue about how impact is used and understood. This might then lead to shared understanding. I believe this is something academia should consider too. The relevant stakeholders might include individual academics; the institutions they work for; scientific unions, organisations and academies; research funding agencies; government departments; academic publishers; and consultancies like Elevate. Perhaps funding agencies could take the lead?

Shared understanding based on dialogue could help address the bafflement expressed by individual academics such as the Australian researcher quoted above. Funding agencies could develop more precise, context-specific templates of statements of impact. Applicants could approach such statements with greater assurance instead of succumbing to the pressure of competition and feeling tempted to exaggerate. Similarly, sustained conversations among universities, researchers and publishers might help to come up with nuanced criteria – that go beyond the journal impact factor – for evaluating research.

This discussion has already begun, as indicated by, for example, the Young Academy seminar, reports such as The Metric Tide and the experimentation with pre-print servers and post-publication review. But much of this is bottom-up and uncoordinated: although that has its strengths, I believe it could be complemented by a more deliberate and sustained dialogue among the stakeholders, not only in the Western world but also in other parts of the world, including China, India, Latin America and Africa.

We at Elevate look forward to contributing to this dialogue and, possibly, mediating it.

Ninad is an alumnus of Elevate Scientific, having been its Managing Editor from 2016 to 2020.